The New Old

Led the design and illustration section of an exhibition about the future of aging at the Design Museum in London.

①

THE BRIEF

"The New Old" is an exhibition at the Design Museum in London about the future of aging. Thirty years after the groundbreaking "The New Design for Old", this exhibition explored how design can enhance life in old age. Showcasing innovations from robotic clothing to driverless cars, it reimagined design for aging.

IDEO was tasked with focusing on aging and community.

②

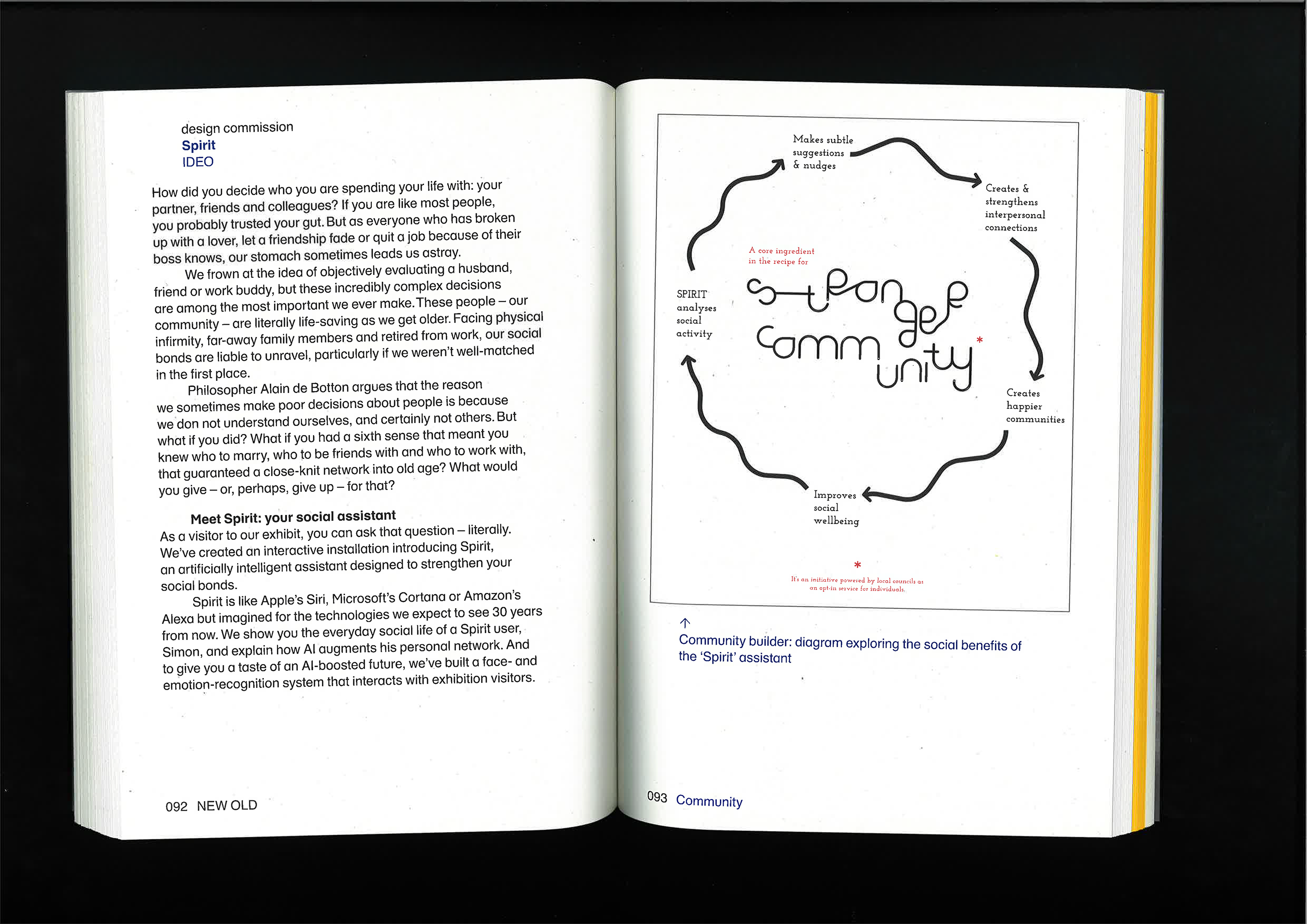

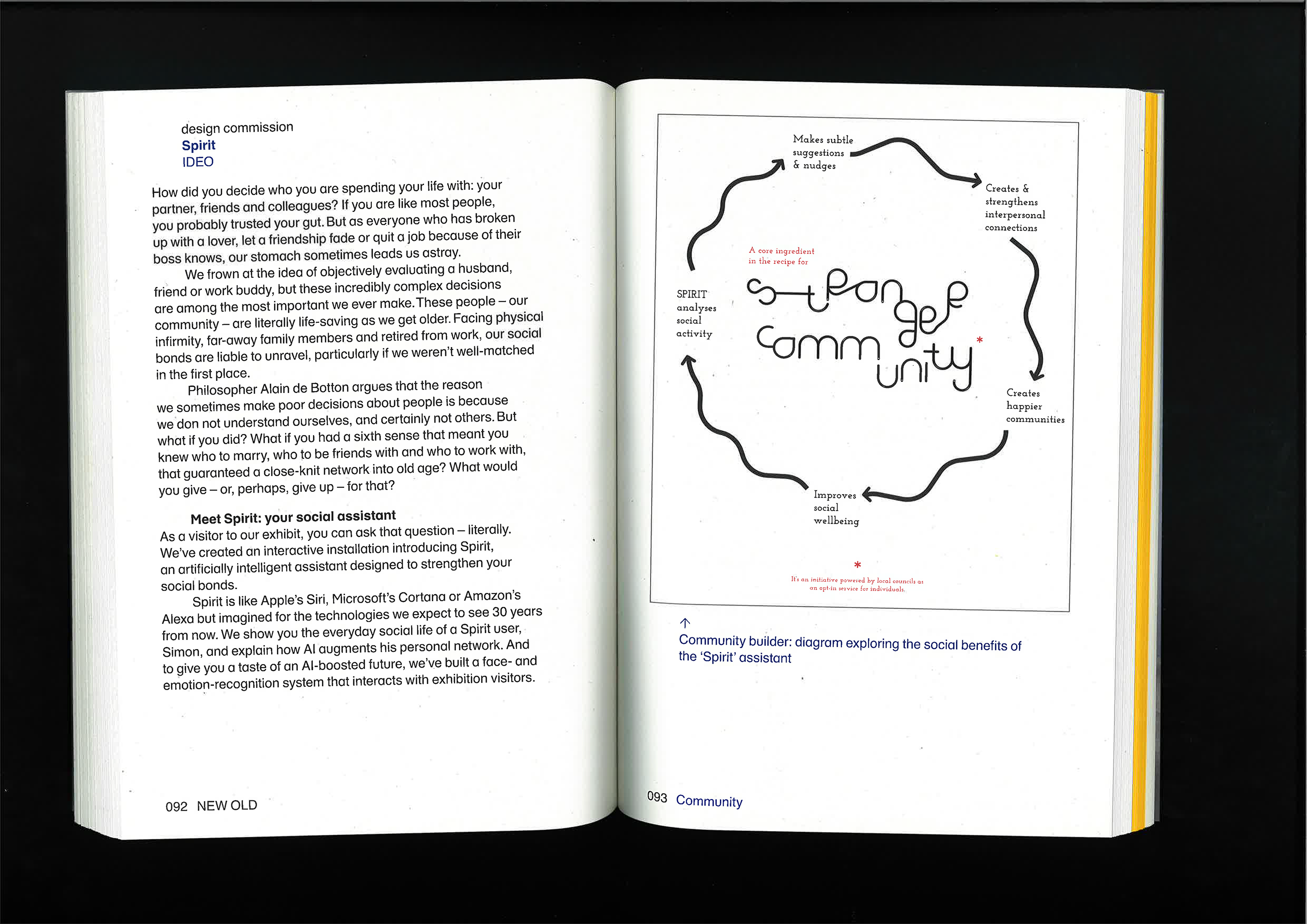

THE CONCEPT

Our concept, Spirit, is an assistant in the vein of Apple’s Siri that uses futuristic technology to strengthen social bonds. Our research with older people and into emerging trends revealed that automation is making activities like shopping and working more isolating. Spirit counters this by facilitating social interaction.

In our envisioned future, Spirit integrates seamlessly into daily life, monitoring interactions and emotions via sensors. By analyzing this data, Spirit identifies what promotes well-being, enriches conversations, and connects potential companions.

Spirit highlights technology's growing role and stresses the need for human-centered design in such services. As emerging tech replaces conventional tools, it is vital that we reflect on the fundamental human needs that must be kept intact, such as social connection.

③

THE VISITOR EXPERIENCE

I worked with a small multidisciplinary team of software designers, developers, and writers to design a four-part exhibition module: a large illustration, a series of sculptures, a screen with 3D art, and an interactive booth. I was responsible for project management, exhibition graphics, and illustrations.

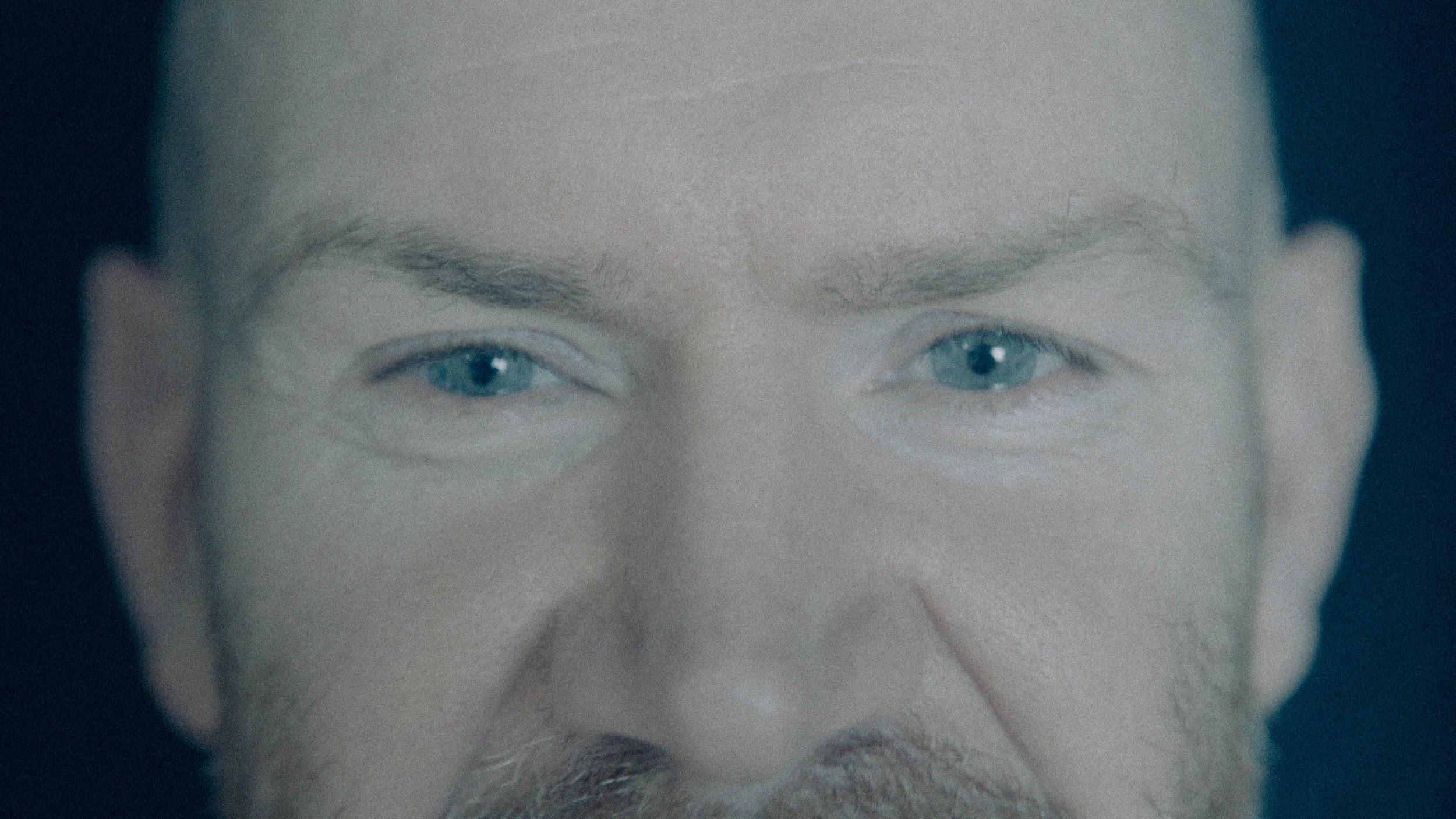

Simon’s Story

We scripted and illustrated the story of 92-year-old Simon, set in 2050. A series of vignettes showed how Spirit's functions, integrated into his body, enhanced his social interactions.

Simon’s Body Parts

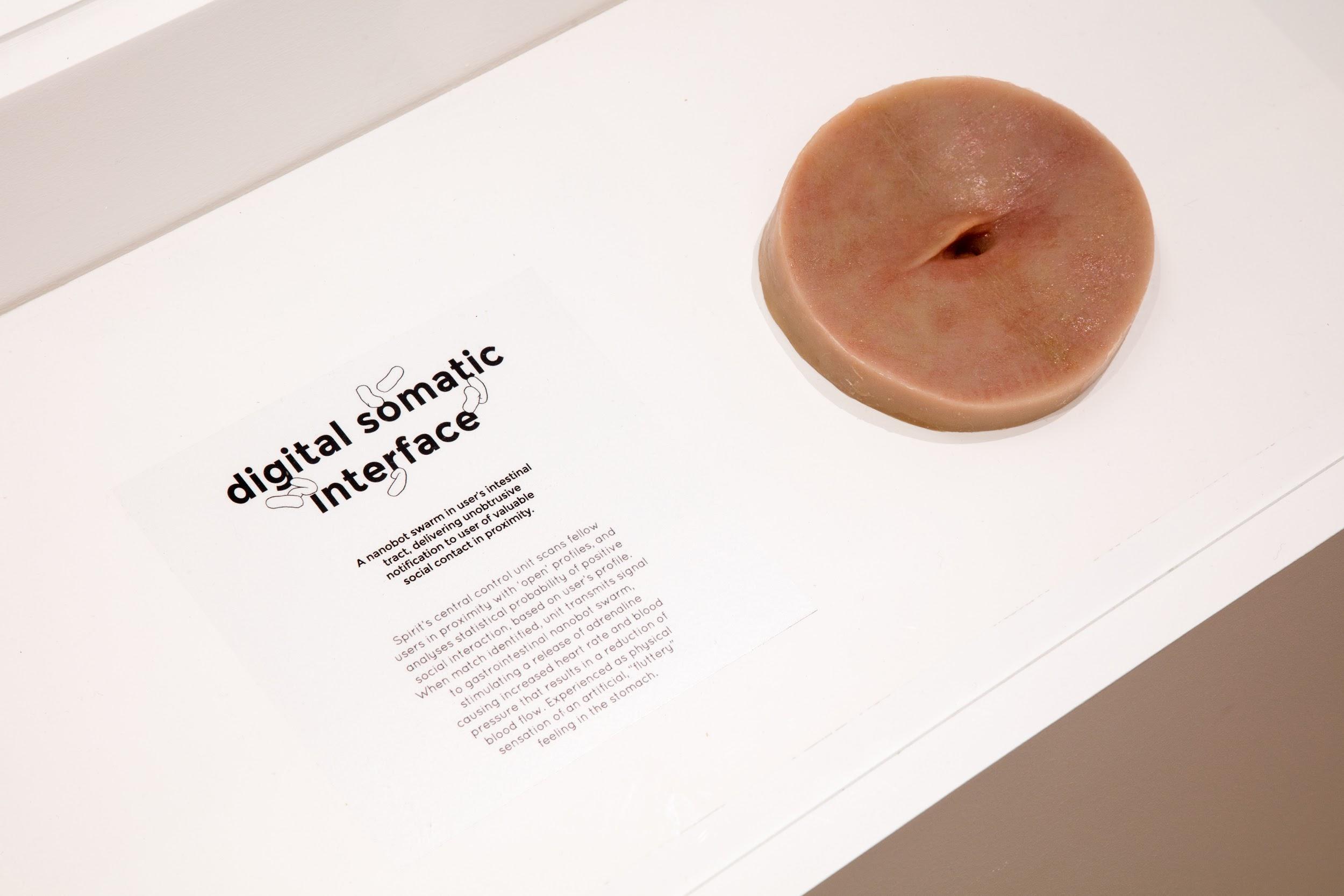

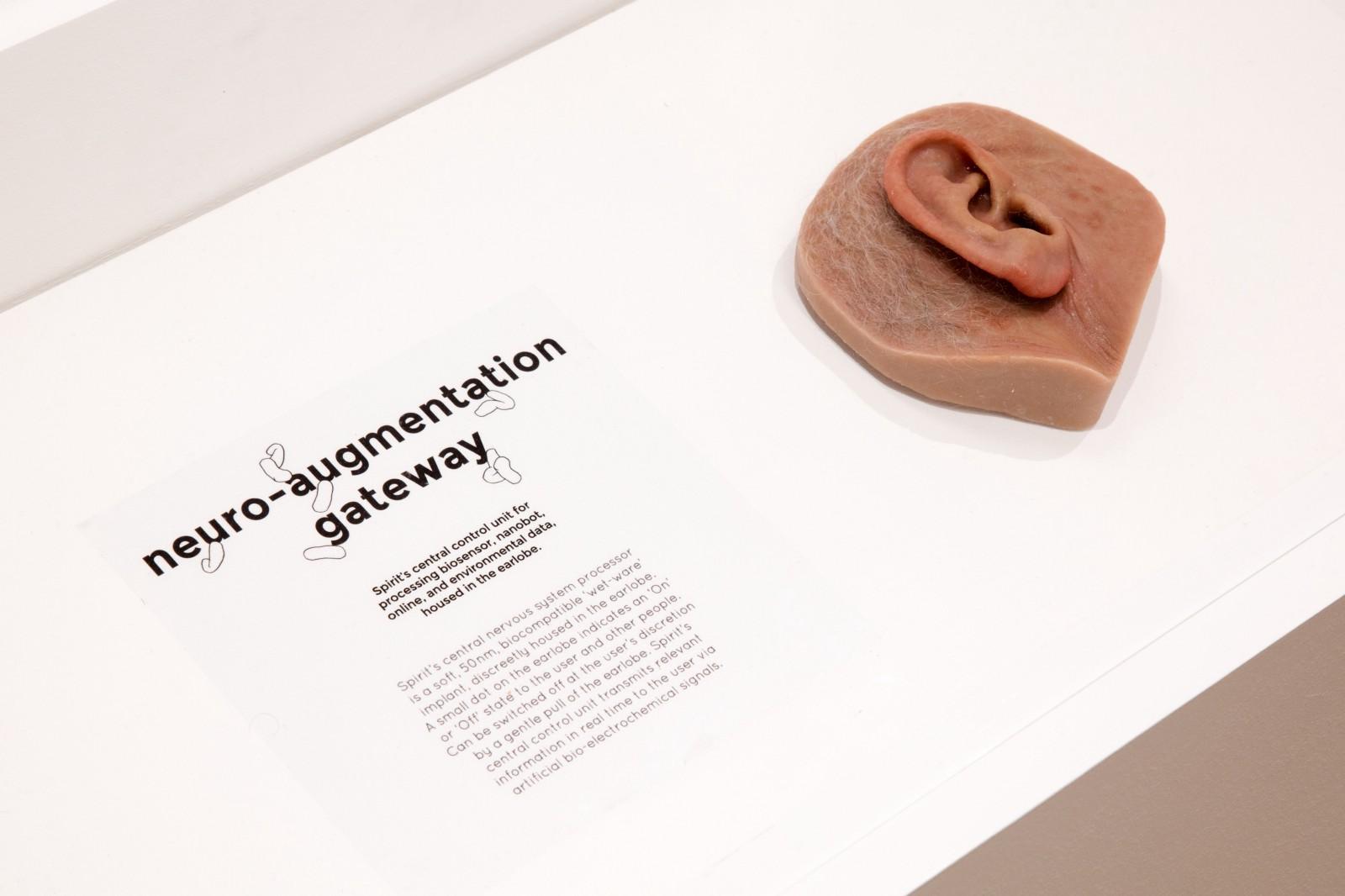

We worked with Dr. Agi Haines to design realistic sculptures representing three body parts, illustrating the key Spirit features linked to Simon’s story.

- A Belly, a.k.a. Digital Somatic Interface – A nanobot swarm in the user's intestinal tract that unobtrusively notifies the user of valuable social contact.

- An Ear, a.k.a. Neuro-augmentation Gateway – Spirit's central control unit, housed in the earlobe, for processing biosensor, nanobot, online, and environmental data.

- A Hand, a.k.a. Organic Social Interaction Display – A micro bio-display showing the user's physical human contact over the past month.

*Dr. Agi Haines is a design practitioner and researcher exploring the human body's potential as a material, blending traditional sculptural techniques with emerging technologies to question societal acceptance of bodily malleability.

Spirit Screen + Booth

This was the interactive part of the exhibition. It consisted of a screen displaying 3D art and a booth where we hosted Spirit, a machine that asked visitors questions and listened to their answers.

We wanted an interactive booth for three reasons:

- To provide visitors with a glimpse of interacting with an artificial entity

- To gauge people's reactions to the interaction

- To engage in playful experimentation and prototyping

While current AI technology is still developing its ability to engage in real dialogue with humans, it learns from interactions, much like autonomous cars do with each mile driven.

Unlike popular AI products like Siri and Alexa, which respond to user commands, our Spirit actively asks visitors intimate questions such as what makes them happiest and if they would trust an AI to choose their friends. This reversal of roles sparked our interest and prompted exploration into the future of AI-human interactions.

The exhibit had two states:

- In the idle state, the screen displayed quotes from previous visitors who had talked to Spirit and visualized the gathered data.

- In the interactive state, visitors entered an audio booth where Spirit greeted them and mediated the conversation. It asked questions like "What makes you happiest?" Each answer created a pebble in the visualization, with its attributes determined by the response's length and emotion. The pebble then merged into a collective sea of pebbles from all interactions.
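As a rough illustration of how an answer's length and emotion could drive a pebble's attributes, here is a minimal Python sketch. It is not the exhibit's code: the `Pebble` fields, the `make_pebble` helper, the size and color formulas, and the assumption that emotion arrives as a score between -1 and 1 are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Pebble:
    radius: float  # hypothetical attribute: grows with the length of the answer
    hue: float     # hypothetical attribute: 0 (red) to 120 (green), from emotion


def make_pebble(answer: str, emotion: float) -> Pebble:
    """Map one spoken answer to a pebble.

    `emotion` is assumed to be a score in [-1, 1], negative to positive.
    """
    words = len(answer.split())
    # Cap the word count so a very long answer does not dominate the screen.
    radius = 10.0 + min(words, 50) * 0.8
    # Clamp out-of-range scores, then rescale [-1, 1] to a hue in [0, 120].
    emotion = max(-1.0, min(1.0, emotion))
    hue = (emotion + 1.0) / 2.0 * 120.0
    return Pebble(radius=radius, hue=hue)


pebble = make_pebble("Spending time with my grandchildren", 0.9)
print(pebble)
```

Capping the length and clamping the score keeps every pebble within a predictable range, which matters once thousands of them are merged into a single visualization.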

The exhibit had to run continuously for five weeks. It relied on a custom script for conversations and a chatbot API for unexpected responses. Because the voice-to-text functionality was unreliable, the scripted questions were prioritized.
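The script-first approach described above might look something like the following Python sketch. Everything here is an assumption for illustration: the `run_conversation` function, its `listen`, `say`, and `fallback` callbacks, and the rule that a reply ending in a question mark counts as "unexpected" are hypothetical, and `fallback` merely stands in for whatever chatbot API was used.

```python
from typing import Callable, List, Optional, Tuple

# Scripted questions taken from the examples mentioned in the text.
SCRIPT = [
    "What makes you happiest?",
    "Would you trust an AI to choose your friends?",
]


def run_conversation(
    listen: Callable[[], Optional[str]],  # returns None or "" if transcription fails
    say: Callable[[str], None],           # speaks a line to the visitor
    fallback: Callable[[str], str],       # stand-in for a chatbot API call
    script: List[str] = SCRIPT,
) -> List[Tuple[str, str]]:
    """Ask every scripted question; use the fallback only for unexpected replies."""
    answers = []
    for question in script:
        say(question)
        heard = listen()
        if not heard:
            # Voice-to-text failed: skip ahead so the script keeps moving.
            continue
        answers.append((question, heard))
        # Assumption: a visitor asking something back is an "unexpected" reply.
        if heard.strip().endswith("?"):
            say(fallback(heard))
    return answers
```

Because the loop always advances to the next scripted question, a failed transcription costs one answer rather than stalling the whole session, which suits an installation that has to run unattended.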

The technical setup included an audio booth with Arduino and iPhone for occupancy detection. The iPhone ran a custom app for voice-to-text conversion and emotion analysis. Data were stored and sent for further processing.